Post-Game Analysis of the IBM Watson Jeopardy Challenge

by celeste.horner @gmail.com

The IBM Watson artificial intelligence system achieved a milestone in the field of artificial intelligence and natural language processing by winning the Jeopardy general knowledge competition. [video] Competing in real time while disconnected from the internet, Watson drew on massively parallel computing resources (90 servers, equivalent to over 6000 personal computers, with 15 terabytes of RAM) and 200 million pages of information, including the World Book Encyclopedia, Wikipedia, the American Medical Association Encyclopedia of Medicine, the Dictionary of Cliches, Facts on File Word and Phrase Origins, the Internet Movie Database, the New York Times archive, and the Bible [PBS Newshour - Miles O'Brien report]. [PBS Nova: The Smartest Machine on Earth] The hard-working programming team at IBM scored a well-earned public-relations coup for their fine company and helped define an industry-leading vision for ubiquitous, intelligent cloud computing.


Google's performance has proved particularly impressive because it cleverly includes singular/plural, tense (invented/invents, open/opens/opened) and synonym (writer=author, murder=kill) variations in search results, and even compensates for misspellings (estabished/established).
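The following toy Python sketch roughly illustrates the variant matching described above through query expansion. It does not show how Google actually works. It assumes a small hand-written vocabulary, a hypothetical synonym table, and naive suffix rules. Python's standard `difflib.get_close_matches` with a 0.8 similarity cutoff stands in for misspelling correction.

```python
import difflib

# Hypothetical toy vocabulary and synonym table; a real engine would mine these from usage data.
VOCABULARY = ["established", "invent", "invents", "invented", "open", "opens", "opened",
              "writer", "author", "murder", "kill"]
SYNONYMS = {"writer": {"author"}, "author": {"writer"}, "murder": {"kill"}, "kill": {"murder"}}
SUFFIXES = ("", "s", "ed")  # crude inflection rules, an illustrative assumption


def correct_spelling(word: str) -> str:
    """Map a possibly misspelled word to its closest vocabulary entry (similarity >= 0.8)."""
    matches = difflib.get_close_matches(word, VOCABULARY, n=1, cutoff=0.8)
    return matches[0] if matches else word


def inflections(word: str) -> set[str]:
    """Strip one known suffix, re-add each suffix, and keep forms found in the vocabulary."""
    stem = word
    for suffix in ("ed", "s"):
        if word.endswith(suffix):
            stem = word[: -len(suffix)]
            break
    return {stem + s for s in SUFFIXES if stem + s in VOCABULARY} | {word}


def expand_query(query: str) -> set[str]:
    """Expand each query word with spelling correction, inflections, and synonyms."""
    terms: set[str] = set()
    for raw in query.lower().split():
        for variant in inflections(correct_spelling(raw)):
            terms.add(variant)
            terms |= SYNONYMS.get(variant, set())
    return terms


print(sorted(expand_query("estabished writer opened")))
# ['author', 'established', 'open', 'opened', 'opens', 'writer']
```

A production engine would presumably learn its variants and corrections from large corpora and query logs rather than from fixed tables.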

In the commentary below, we discuss algorithms that are effective for answering Jeopardy questions and propose how to exceed that level of performance by encoding deep semantics related to the questions.

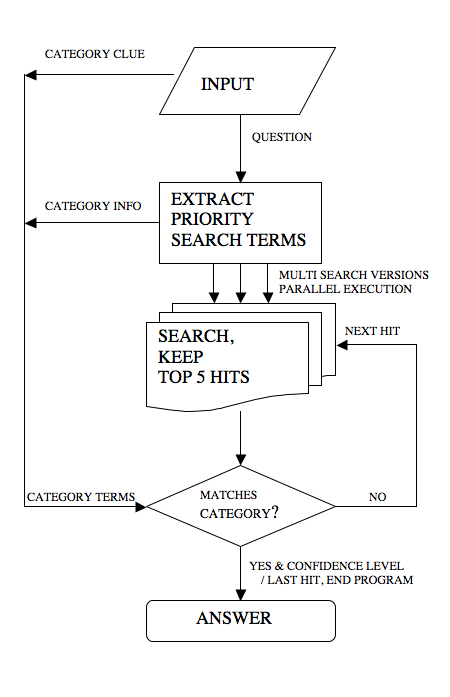

Algorithms for Answering Jeopardy Questions

Other important algorithms

Two Phase Text Match Method


Extract priority search terms from the question.

Prioritization helps evade misleading and irrelevant terms.

Common placeholders: "this" and other pronouns in the clue.

Game Analysis


- Day 1: video, part 2, text archive, commentary

- Day 2: video, part 2, text archive, commentary

- Day 3: video, part 2, text archive, commentary

- CONCLUSIONS

- PBS Nova: The Smartest Machine on Earth

Day 1 (video, part 2, text archive)

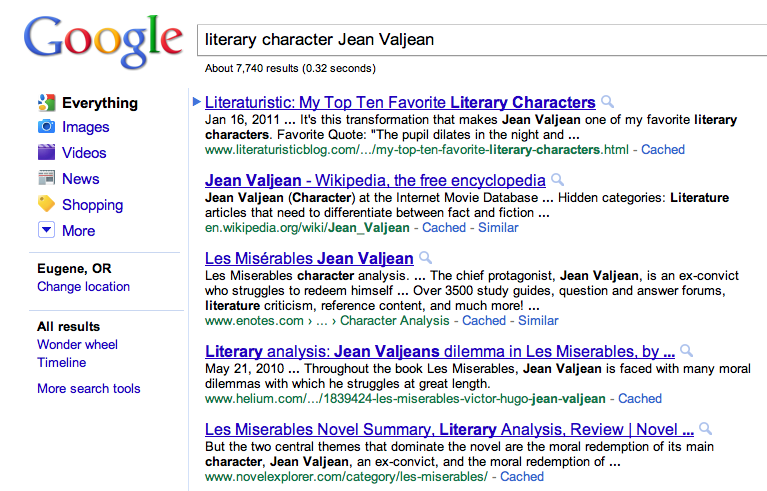

This question lends itself to the two-phase Jeopardy algorithm we describe: a search for the quoted phrase "Les Miserables" and the verb phrase "stealing a loaf of bread" immediately retrieves Jean Valjean. Watson won this question, the 18th of the first Jeopardy round, televised February 14, 2011.

Solution: Use the two-phase text match algorithm.

1. Extract priority search terms.

2. Extract the category.

3. Perform search phase 1 and keep the top 5 results: "Les Miserables" stealing a loaf of bread

4. Validate the answer: #1: Jean Valjean
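The four steps above can be sketched in Python. This minimal illustration is not Watson's pipeline and rests on several hypothetical stand-ins: the corpus, the entity-type table, the stopword list, and the clue wording (a paraphrase, not the broadcast text). Phase 1 scores passages simply by counting how many priority terms each one contains.

```python
import re
from collections import Counter

STOPWORDS = {"a", "an", "the", "of", "in", "is", "for", "this", "man", "his", "to"}

# Hypothetical toy corpus: (passage, entities mentioned in the passage).
CORPUS = [
    ("Jean Valjean is jailed for stealing a loaf of bread in Les Miserables.",
     ["Jean Valjean", "Les Miserables"]),
    ("Javert pursues the ex-convict through Les Miserables.", ["Javert", "Les Miserables"]),
    ("Victor Hugo published Les Miserables in 1862.", ["Victor Hugo", "Les Miserables"]),
    ("A loaf of bread costs little in Paris.", ["Paris"]),
]
# Hypothetical entity-type table used for answer validation.
ENTITY_TYPE = {"Jean Valjean": "person", "Javert": "person", "Victor Hugo": "person",
               "Les Miserables": "work", "Paris": "place"}


def extract_priority_terms(clue):
    """Step 1: quoted phrases first, then the remaining non-stopword words."""
    phrases = [p.lower() for p in re.findall(r'"([^"]+)"', clue)]
    rest = re.sub(r'"[^"]+"', " ", clue).lower()
    return phrases + [w for w in re.findall(r"[a-z']+", rest) if w not in STOPWORDS]


def extract_category(clue):
    """Step 2: map the placeholder phrase to an expected answer type (toy rules)."""
    lowered = clue.lower()
    if re.search(r"\bthis (man|woman|guy|author|character)\b", lowered):
        return "person"
    if re.search(r"\bthis (city|country|place)\b", lowered):
        return "place"
    return None


def phase1_search(terms, k=5):
    """Step 3: score passages by how many priority terms they contain; keep the top k."""
    scored = [(sum(t in text.lower() for t in terms), text, entities)
              for text, entities in CORPUS]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


def phase2_validate(results, expected_type, terms):
    """Step 4: vote for entities of the expected type that do not already appear in the clue."""
    votes = Counter()
    for score, _text, entities in results:
        for entity in entities:
            type_ok = expected_type is None or ENTITY_TYPE.get(entity) == expected_type
            if type_ok and entity.lower() not in terms:
                votes[entity] += score
    return votes.most_common(1)[0][0] if votes else None


# Hypothetical paraphrase of the clue, not the broadcast wording.
clue = 'In "Les Miserables" this man is jailed for stealing a loaf of bread'
terms = extract_priority_terms(clue)
print(phase2_validate(phase1_search(terms), extract_category(clue), terms))  # Jean Valjean
```

Here, validation combines a type check with a vote weighted by passage score. Excluding entities already named in the clue keeps "Les Miserables" from answering its own question.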

Computing Deep Semantics of the Les Miserables Question

Victor Hugo's novel Les Miserables explores deep philosophical themes of social justice and morality. At DigitalThought, we are tackling the ambitious task of creating a wise, ethical and compassionate computer capable of understanding human nature and situations. DigitalThought represents the world as an interaction of entities. Active agent entities have a set of differentially prioritized motivations (directives, or obligations) which lead them to perform actions to achieve their goals. Consider the interaction of two characters, Valjean and Javert:

- Valjean: steals a loaf of bread to feed starving children. Caught, he serves 19 years of hard labor. Embittered by prejudice against ex-convicts, he returns to stealing, but is converted by the example of a kind priest who forgives his thievery. He reforms, becomes a successful businessman and then mayor. He is doggedly pursued by the law-enforcement officer Javert.

- Javert: incorruptible, dogmatic, committed, a legal absolutist, like a robot. He cannot transcend his programming or handle an exception. He commits suicide when he realizes that Valjean is actually admirable and does not deserve his persecution. He cannot reconcile extenuating circumstances with strict adherence to the law.

Evaluate input: understand APB

The computational basis of the humor is a mild category violation: APB normally refers to an actual human, whereas in this instance the targeted agent belongs to a fictional realm.

- idiom: $subject $be wanted for $committing? $crime

- APB: a communication to law enforcement agents to apprehend an agent

- literary character: fictional context

- if X has stolen Y, X has committed theft, and the owner of Y is no longer in possession of Y

- X may be motivated by family (children), hunger, class animosity (revenge, inflicting suffering on the privileged), a wish to reduce X's perceived lack, or lack of opportunity
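The entity model and the APB notes above can be made concrete with a small Python sketch. Everything here is an illustrative assumption, not DigitalThought's actual representation:

- the `Agent` record with (priority, motivation) pairs;
- the regular expression standing in for the `$subject $be wanted for $committing? $crime` idiom;
- the hypothetical baker who owns the loaf;
- the rule that a fictional APB target counts as a category violation.

```python
import re
from dataclasses import dataclass, field

# The idiom "$subject $be wanted for $committing? $crime" as a regular expression.
WANTED_IDIOM = re.compile(
    r"^(?P<subject>.+?) (?:is|are|was|were) wanted for (?:committing )?(?P<crime>.+?)\.?$",
    re.IGNORECASE,
)


@dataclass
class Agent:
    """Toy entity: active agents carry (priority, motivation) pairs; higher priority wins."""
    name: str
    fictional: bool = False
    possessions: set = field(default_factory=set)
    motivations: list = field(default_factory=list)


def parse_wanted(sentence):
    """Return (subject, crime) if the sentence fits the 'wanted for' idiom, else None."""
    match = WANTED_IDIOM.match(sentence.strip())
    return (match["subject"], match["crime"]) if match else None


def apply_theft(thief, owner, item):
    """If X has stolen Y, X has committed theft and Y's owner no longer possesses Y."""
    owner.possessions.discard(item)
    return {"agent": thief.name, "act": "theft", "victim": owner.name, "item": item}


def apb_category_violation(target):
    """An APB normally targets a real person; a fictional target is a mild category violation."""
    return target.fictional


def strongest_motivation(agent):
    """Return the highest-priority motivation, or None if the agent has none."""
    return max(agent.motivations)[1] if agent.motivations else None


valjean = Agent("Jean Valjean", fictional=True,
                motivations=[(2, "feed starving children"), (1, "hunger")])
baker = Agent("baker", fictional=True, possessions={"loaf of bread"})  # hypothetical owner
print(parse_wanted("Jean Valjean is wanted for stealing a loaf of bread."))
print(apply_theft(valjean, baker, "loaf of bread"), baker.possessions)
print(apb_category_violation(valjean), strongest_motivation(valjean))
```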

Literary Characters APB: His victims include Charity Burbage, Mad Eye Moody & Severus Snape ...

This question was biased against Watson. The answer required human-style cognition and could not be solved by straight text lookup methods. Character names like Severus Snape and Mad Eye Moody uniquely allude to the plot of Harry Potter, but there search engine techniques hit a dead end. First, the role of victim must be applied to each member of a distributive, non-exhaustive list. Next, the history of each character must be reviewed to find the nature of their misfortune (robbery? turned into frogs?) and to identify the common perpetrator. All were apparently killed by or at the behest of Voldemort. The clue "He'd be easier to catch if you'd just name him" is mostly noise to a search engine. To an associative mind, though, it is an instant giveaway, because Voldemort's name was so feared that it became unmentionable. With no verbatim link between the clue and the answer, Watson did well to use the pronoun "his" to select only male characters in Harry Potter; inferring gender from a name or from context is a task in itself. The correct answer, Voldemort, was Watson's second-choice reply, with 20% confidence.
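The chain of reasoning above can be sketched in Python: distribute the victim role over the list, find the common perpetrator, then filter by the gender of "his". The perpetrator and gender tables are hypothetical, hand-built facts. Obtaining such facts from text is exactly the hard part the paragraph describes, so this sketch only shows the inference once the facts are available.

```python
import re

# Hypothetical toy knowledge base: victim -> characters who killed them or ordered it.
# A real system would have to mine these facts from plot summaries.
PERPETRATORS = {
    "Charity Burbage": {"Voldemort"},
    "Mad Eye Moody": {"Voldemort"},
    "Severus Snape": {"Voldemort", "Nagini"},
}
GENDER = {"Voldemort": "male", "Nagini": "female"}  # hypothetical gender table
PRONOUN_GENDER = {"he": "male", "his": "male", "him": "male", "she": "female", "her": "female"}


def split_victims(text):
    """Distribute the victim role over each member of a list like 'A, B & C'."""
    return [name.strip() for name in re.split(r",|&|\band\b", text) if name.strip()]


def common_perpetrators(victims):
    """Intersect the perpetrator sets of every listed victim."""
    sets = [PERPETRATORS.get(v, set()) for v in victims]
    return set.intersection(*sets) if sets else set()


def answer(victim_list, pronoun):
    """Keep common perpetrators whose gender agrees with the clue's pronoun."""
    wanted = PRONOUN_GENDER.get(pronoun.lower())
    candidates = common_perpetrators(split_victims(victim_list))
    return sorted(c for c in candidates if wanted is None or GENDER.get(c) == wanted)


print(answer("Charity Burbage, Mad Eye Moody & Severus Snape", "His"))  # ['Voldemort']
```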

BEATLES PEOPLE was computationally the easiest set of questions in the initial Jeopardy round. Watson answered all 5 correctly, winning 4 of 5. The answer procedure required text matching between Beatles lyrics and the clue. Watson then extracted the lyric word holding the same relative position as the word "this" (or another pronoun) in the clue. Ex: $200 "And anytime you feel the pain, hey" this guy "refrain, don't carry the world upon your shoulders". Answer (Watson): Who is Jude? (baby sings Jude) Watson did a good job distinguishing between animate and inanimate subjects, answering "Who is Lady Madonna?" but "What is London?". How did Watson know that "Jude" was a person? Perhaps it was tipped off by the clue asking for "this guy". Watson and other smart computers need a database somewhere that designates the categories of terms, for instance that "guy", as well as king, queen, doctor, president, and lady, is generally expected to be human. Otherwise, a great deal of mining and computation is needed to infer status from various references, relationships, and actions. Jeopardy judges let Watson get away with a technical error: it answered "What is Maxwell's silver hammer?" when the Beatles People category asked for a person's name ("Who is Maxwell?").

Beatles' Wisdom: All you need is love, Imagine, Hey Jude, Take a sad song and make it better, Here comes the sun
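Below is a minimal Python sketch of the lyric fill-in-the-blank step, plus the term-category lookup that decides between "Who is" and "What is". The `HUMAN_TERMS` set, the three-word gap limit, and the normalization rules are assumptions for illustration. The lyric line uses only the fragments quoted in the $200 clue, with the gap filled in.

```python
import re

# Hypothetical category table: placeholder nouns generally expected to denote humans.
HUMAN_TERMS = {"guy", "king", "queen", "doctor", "president", "lady"}


def normalize(text):
    """Lowercase and keep only letters, apostrophes and spaces, then split into words."""
    return re.sub(r"[^a-z' ]+", " ", text.lower()).split()


def fill_blank(lyrics, before, after, max_gap=3):
    """Return the lyric words that sit between the clue's left and right context."""
    words, left, right = normalize(lyrics), normalize(before), normalize(after)
    for i in range(len(words) - len(left) + 1):
        if words[i:i + len(left)] != left:
            continue
        start = i + len(left)
        for gap in range(1, max_gap + 1):
            end = start + gap
            if words[end:end + len(right)] == right:
                return " ".join(words[start:end])
    return None


def answer_form(placeholder_noun):
    """Use 'Who is' for placeholders naming humans and 'What is' otherwise."""
    return "Who is" if placeholder_noun in HUMAN_TERMS else "What is"


lyric = "And anytime you feel the pain, hey Jude, refrain, don't carry the world upon your shoulders"
gap = fill_blank(lyric, "And anytime you feel the pain, hey",
                 "refrain, don't carry the world upon your shoulders")
print(f"{answer_form('guy')} {gap.title()}?")  # Who is Jude?
```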

Olympic Oddities: The anatomical oddity of George Eyser

Watson was robbed on this question. Watson correctly selected George Eyser's leg as the most anatomically relevant aspect of his record, but the judges denied credit because Watson didn't specify that the leg was missing. Eyser had a partial leg and competed with a prosthetic [wikipedia], so that judgement was debatable. It would be interesting to know to what degree Watson, who is being groomed for medical consulting applications, is cognizant that a missing leg constitutes a physical anomaly.

Olympic Oddities: A 1976 entrant in the "modern" this was kicked out for wiring his epee to score points without touching his foe

Following the algorithm of extracting proper nouns, dates, and verb phrases produces a search for 1976 Olympics epee wiring, which immediately retrieves a relevant file. The fill-in-the-blank technique focuses on the placeholder pronoun "this", resolving "modern" this to modern pentathlon. Answer: pentathlon.

Watson did well filling in the blanks of Beatles song lyrics and matching dictionary definitions of stick as a part of a tree and as a verb meaning puncture. Again, though, this was achievable with text string matching and fill-in-the-blank algorithms; mastery of the nuances of language was not required.
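The definition matching just described can be approximated by searching for a word with two distinct senses, each sharing content words with one half of the clue. The mini-dictionary and stopword list below are hypothetical. A real system would draw on a full lexical resource and use a better similarity measure than raw word overlap.

```python
import re

# Hypothetical mini-dictionary: word -> short definitions (several senses per word).
DICTIONARY = {
    "stick": ["a small part of a tree branch", "to puncture or pierce"],
    "branch": ["a part of a tree", "a local office of a business"],
    "bark": ["the outer covering of a tree", "the sound a dog makes"],
    "pierce": ["to puncture"],
}
STOP = {"a", "an", "the", "of", "or", "to", "as"}


def content_words(text):
    """Lowercased words of the text minus a few function words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP}


def covers_both(definitions, sense_a, sense_b):
    """True if two different definitions overlap sense_a and sense_b respectively."""
    a, b = content_words(sense_a), content_words(sense_b)
    return any(a & content_words(d1) and b & content_words(d2)
               for i, d1 in enumerate(definitions)
               for j, d2 in enumerate(definitions) if i != j)


def word_for_both(sense_a, sense_b):
    """Return every dictionary word having separate senses that match both clue halves."""
    return sorted(w for w, defs in DICTIONARY.items() if covers_both(defs, sense_a, sense_b))


print(word_for_both("part of a tree", "puncture"))  # ['stick']
```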

A Google search for Rembrandt's Storm on the Sea returns the correct answer first, even when Rembrandt is misspelled!

OHIO, NOT SPAIN

A Goya stolen (but recovered) in 2006 belonged to a museum in this city (Ohio, not Spain)

Watson ignored the negation of Spain, answering Madrid instead of Toledo. This error is akin to offering Toronto as a US city.
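To handle the parenthetical hint, a system has to treat "not Spain" as an exclusion rather than as one more search term. Here is a minimal Python sketch. It assumes a hypothetical candidate list of (city, region) pairs and a regular expression that recognizes only the "(A, not B)" pattern.

```python
import re

# Hypothetical candidate list: (city, region) pairs a search might return for the Goya clue.
CANDIDATES = [("Madrid", "Spain"), ("Toledo", "Spain"), ("Toledo", "Ohio")]


def parse_constraints(clue):
    """Extract a '(A, not B)' hint as (required_region, excluded_region)."""
    match = re.search(r"\(([^,()]+),\s*not\s+([^()]+)\)", clue)
    return (match.group(1).strip(), match.group(2).strip()) if match else (None, None)


def filter_candidates(candidates, clue):
    """Drop candidates in the negated region and keep those in the required one."""
    required, excluded = parse_constraints(clue)
    return [city for city, region in candidates
            if region != excluded and (required is None or region == required)]


clue = ("A Goya stolen (but recovered) in 2006 belonged to a museum "
        "in this city (Ohio, not Spain)")
print(filter_candidates(CANDIDATES, clue))  # ['Toledo']
```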

Perform algorithm

| Category | Final Jeopardy Question |
| --- | --- |
| 19th century novelists | William Wilkinson's "An Account of the Principalities of Wallachia and Moldavia" inspired this author's most famous novel |

Answer: Who is Bram Stoker?

Finishing second, Jeopardy master Ken Jennings concedes defeat to "our new computer overlords" (video).

What author was inspired by Wilkinson's Wallachia and Moldavia? Many Jeopardy questions can be answered with a multi-stage search engine query. See algorithm below.

Question requests "this author". Answer verification search: Bram Stoker = author?
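The Python sketch below illustrates the answer verification step ("Bram Stoker = author?") together with the idea of reinserting the candidate answer into the question. The passages, the `IS_A` type table, and the capitalized-word support score are all hypothetical simplifications. The sketch substitutes each candidate for "this author" and checks its type. It then measures what fraction of the resulting statement's proper-noun terms co-occur in a single passage.

```python
import re

# Hypothetical toy passages and type facts; real verification would query a large corpus.
PASSAGES = [
    "Bram Stoker drew on William Wilkinson's account of Wallachia and Moldavia for Dracula.",
    "Wallachia and Moldavia were principalities in present-day Romania.",
]
IS_A = {"Bram Stoker": {"person", "author"}, "Victor Hugo": {"person", "author"}}
CAPS = re.compile(r"\b[A-Z][a-z]+\b")  # crude proxy for proper-noun terms


def reinsert(clue, placeholder, candidate):
    """Substitute the candidate answer for the placeholder phrase in the clue."""
    return re.sub(re.escape(placeholder), lambda _m: candidate, clue, flags=re.IGNORECASE)


def support(statement):
    """Largest fraction of the statement's capitalized terms found in any single passage."""
    terms = set(CAPS.findall(statement))
    if not terms:
        return 0.0
    return max(len(terms & set(CAPS.findall(p))) for p in PASSAGES) / len(terms)


def verify(clue, placeholder, candidate, required_type):
    """Check the candidate's type, then how well the reinserted clue is supported."""
    type_ok = required_type in IS_A.get(candidate, set())
    return type_ok, support(reinsert(clue, placeholder, candidate))


CLUE = ("William Wilkinson's \"An Account of the Principalities of Wallachia and Moldavia\" "
        "inspired this author's most famous novel")
for name in ("Bram Stoker", "Victor Hugo"):
    print(name, verify(CLUE, "this author", name, "author"))
```

Both candidates pass the type check in this toy setup, so the support score separates them. Bram Stoker covers more of the clue's terms within one passage than Victor Hugo does.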

CONCLUSIONS

- The Jeopardy contest was fair; the proportion of questions favoring human vs machine cognition was balanced.

- The IBM team did a great job of producing a system which could evaluate options and answer a broad variety of questions in real time

- The parallel architecture that IBM is focusing on is ideal because numerous hypotheses about text meaning must compete and collaborate in order to forge an interpretation.

- Superficial text matching approaches to language processing are nonetheless sufficient to answer most questions

- GRAMMATICAL ANALYSIS DEMONSTRATED? "The Hedgehog and the Fox" is an essay on this Russian count's view of history by the liberal philosopher Isaiah Berlin (the answer is not the author, Berlin, but Tolstoy)

- Heitor Villa-Lobos dedicated his "12 Etudes" for this instrument (guitar) to Andres Segovia (relations: composer = Villa-Lobos, dedicated_to = Segovia)

- One can answer most Jeopardy questions with only about 5 algorithms

- Knowledge of categories is important; can be inferred from many text examples

- gender indicators, '20s date abbreviations, initial letters, rhymes, contains a string (church, state)

- extract subject of possessive

- CATEGORIZE: Russian count = human. US city = place

- VERIFY ANSWER. Reinsert the candidate answer in the question to verify it

- PROPER RELATIONSHIP: the requested answer is not always the composer; sometimes it is the instrument, sometimes the author

- author=source. owner possessive

- Most impressive: identifying the leg as George Eyser's anatomical oddity, a distinctive feature that text matching could not feasibly find

- Questions Likely Favoring Watson

- answer contains unique combination of terms specified in the question. Ex:

- famous quotations and distinctive phrases:

- What's right twice a day? Clock.

- What's a horse designed by committee? Camel

- What has 736 members? EU parliament

- Lookup and rank: what's the northernmost of 3 cities? Look up coordinates, sort by latitude. Watson probably had a custom app for this. (Miles O'Brien demo competition 10:59/21:40)

- Questions Likely Difficult for Watson

- Questions requiring semantic comprehension

- Questions demanding physical world knowledge

- Questions requiring emotional and moral reasoning

- Questions with short text clues -- search too broad for automated approach

- Questions whose answers are not explicitly stated and must be inferred

- Questions diluted with mostly irrelevant terms

- Questions involving negations

- Questions which share no common vocabulary with the answer

- Questions with common words, e.g. chicken dish recipes (Miles O'Brien demo round 12:55/21:40)

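Once a lookup table exists, the "lookup and rank" pattern from the list above is simple to express. The Python sketch below assumes a small hand-entered table of approximate latitudes. It stands in for whatever gazetteer Watson may have used.

```python
# Approximate latitudes (degrees north) standing in for a hypothetical gazetteer lookup.
LATITUDE = {"Boston": 42.36, "Chicago": 41.88, "Toronto": 43.65}


def rank_by(items, table, descending=True):
    """Lookup-and-rank: fetch each item's attribute from the table and sort on it."""
    missing = [item for item in items if item not in table]
    if missing:
        raise KeyError(f"no data for {missing}")
    return sorted(items, key=table.__getitem__, reverse=descending)


def northernmost(cities):
    """Answer 'which of these cities is northernmost?' by sorting on latitude."""
    return rank_by(cities, LATITUDE)[0]


print(northernmost(["Chicago", "Boston", "Toronto"]))  # Toronto
```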

FUTURE WORK PROPOSAL

- INCREASE LANGUAGE COMPREHENSION PROFICIENCY

- Level 1: Pattern recognition and processing

- Level 2: Inference from syntax

- Level 3: heuristics, adjective processing, predicate evaluation

- Level 4: internal conceptual modeling

- Level 5: adept processing with domain expertise

- DEVELOP KNOWLEDGE RECORDING CAPABILITY - add learning capability to question answering system

- IMPROVE QUESTION ANSWERING

- cite sources

- declare reasoning process

- Future work: natural language knowledge recording, source citation, semantics, syntax

- Jeopardy is an engaging forum in which to test AI systems

- Watson should cite his sources

- declare reasoning process

- develop a more sophisticated internal representation of meaning to handle more probing questions

- explain the basis of the humor or viewpoint

- Need system transparency and test of values, wisdom in order to avoid HAL scenario

- deep semantics, human situations and values. Why is the case of the Olympian remarkable? Because he overcame the adversity of losing a leg.

- language choice guidance -- out of the ordinary. Positive connotation - remarkable, extraordinary, negative - strange, odd; clinical - deviation, anomalous

- DEEP SEMANTICS, COMPUTER PHILOSOPHER

- need to cultivate wise, moral computers to avoid HAL scenario

- condensed ontology nucleus - ground concepts, enable scaling, avoid ad hoc rules

- human nature, human condition

- meaning - deprecated: cheating, murder; admired: overcoming adversity
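The language choice guidance above can be sketched as a lookup from a situation's moral valence to a connotation register. The lexicon and valence table are hypothetical and only reuse the examples in these notes. For George Eyser, reading the missing leg as overcoming adversity yields "remarkable" and "extraordinary" rather than "strange" or "odd".

```python
# Hypothetical lexicon built from the notes above: word choices grouped by connotation.
CONNOTATION_WORDS = {
    "positive": ["remarkable", "extraordinary"],
    "negative": ["strange", "odd"],
    "clinical": ["anomalous", "deviation"],
}
# Hypothetical valence table: deprecated vs admired situations.
SITUATION_VALENCE = {
    "cheating": "negative",
    "murder": "negative",
    "overcoming adversity": "positive",
}


def describe_out_of_ordinary(situation, register=None):
    """Pick words for something out of the ordinary: an explicit register wins,
    otherwise the situation's moral valence, otherwise a neutral clinical tone."""
    tone = register or SITUATION_VALENCE.get(situation, "clinical")
    return CONNOTATION_WORDS[tone]


print(describe_out_of_ordinary("overcoming adversity"))  # ['remarkable', 'extraordinary']
print(describe_out_of_ordinary("overcoming adversity", register="clinical"))
```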

SUGGESTED APPLICATIONS FOR MASSIVELY PARALLEL SYSTEMS

- Assistance, enhancement and support, not replacement of humans

- Diagnostics - correlate complex symptom profile with diagnosis and treatment

- Invention - Analyze patented and published methods to develop new solutions for problems

- Mutual benefit transaction design - mediation, negotiation -- analyze goals and assets of parties and generate optimal solution for all

- Decipher the genetic code, cure diseases, aging

- AI for autonomous machines (space and ocean exploration, household maintenance assistance, dangerous apps) - track cognition with real-time world modeling

original intro

IBM System overview

Slate ezine: Ken Jennings comments about competing against Watson in Jeopardy

visual thesaurus -- How Watson Trounced the Humans -- Ben Zimmer

Liz Liddy, Syracuse iSchool dean comments blog

Final Jeopardy - Stephen Baker: "It wasn't just that the computer had to master straightforward language, it had to master humor, nuance, puns, allusions, and slang."

Nova

Congressman beats Watson

Shakespeare Teacher: Ferrucci - How Watson Works

Watson vs. Google data mining RIT

Tech News Daily

Quora transcript

Jeopardy match had out-takes

Jeopardy question statistics
